WorldView-3: Super Satellite’s Most Crucial Tech Stays On The Ground


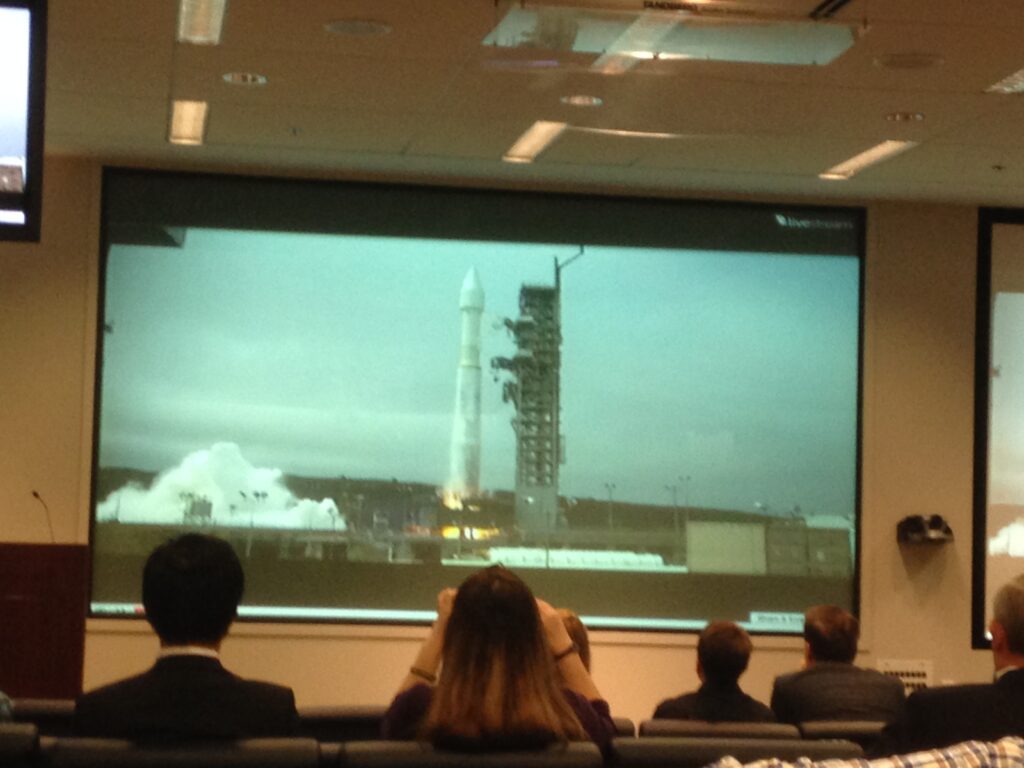

Lockheed Martin employees and guests watch their Atlas V rocket launch the WorldView-3 satellite on Wednesday.

[UPDATED: WorldView-3 launched successfully Wednesday from Vandenberg Air Force Base, California, at 11:30 a.m. Pacific time (2:30 p.m. Eastern).]

When DigitalGlobe’s WorldView-3 satellite soars skyward tomorrow – weather permitting – most attention will naturally be on the parts that go up. But the bus-sized imagery collection satellite is just the high-tech tip of an iceberg, and some of its most interesting and critical capabilities reside on the ground. DigitalGlobe is increasingly not just a space company but an information technology company.

That’s not to say the launch itself is anything less than rocket science. There’s a 15-minute window each day to reach the right trajectory, and as of the latest forecast there’s a 60 percent chance the weather won’t be clear tomorrow. And while the Boeing-Lockheed United Launch Alliance can boast 69 successful launches in a row – its great selling point over untried rival SpaceX – there’s always the chance that something could break down or blow up. Yet the Atlas V rocket that ULA will use for WorldView-3 is hardly new: it is an upgraded descendant of Atlas, America’s first successful ICBM, and the Atlas family has been flying since 1957. ULA owes its record less to new rocket technology than to ever more careful tracking of pre-launch data from every component for any sign of problems – which is largely a data gathering and analysis task.

Likewise, data gathering and analysis are central to DigitalGlobe’s business. The satellites do the gathering – WorldView-3 will offer 30-centimeter resolution, unprecedented in the civilian world and enough to make out individual manholes – but the analysis largely happens on the ground. Without that step, a better satellite just lets you drown the client in ever greater amounts of meaningless raw data: DigitalGlobe’s entire constellation can image four million square kilometers, roughly half the area of the continental US, every day.
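To put the “drown the client” point in numbers, here is a back-of-the-envelope sketch of how many pixels one square kilometer contains at a given ground resolution. It assumes square pixels and counts a single band only. The four-million-square-kilometer daily figure comes from the paragraph above, but treating all of it as 30-centimeter imagery is a deliberately generous, hypothetical upper bound, since not every satellite in the constellation images at 30 centimeters.

```python
# Back-of-the-envelope data volume: pixels per square kilometer at a given
# ground sample distance (GSD). Assumes square pixels and ONE band only.

def pixels_per_km2(gsd_m: float) -> float:
    """Number of pixels needed to tile 1 km^2 at a GSD of gsd_m meters."""
    pixels_per_side = 1000.0 / gsd_m  # 1 km = 1000 m
    return pixels_per_side ** 2

for gsd in (0.3, 1.0):
    print(f"{gsd} m GSD: {pixels_per_km2(gsd):,.0f} pixels per km^2")

# Hypothetical upper bound: the whole 4 million km^2 daily collection at 30 cm.
DAILY_AREA_KM2 = 4_000_000
daily_pixels = DAILY_AREA_KM2 * pixels_per_km2(0.3)
print(f"upper bound: {daily_pixels:.2e} single-band pixels per day")
```

Going from 1 meter to 30 centimeters multiplies the pixel count per square kilometer by roughly eleven, before multiplying again by the number of spectral bands.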

WorldView-3 alone can scan a strip from Washington, DC, to New York City in 45 seconds. It will view every spot on the map through 28 different spectral “bands,” from straightforward black-and-white to color to infrared, including a band specifically designed to see through cirrus clouds. The point of having all these bands is to let computers sort the different types of imagery, filter out obscuring factors like clouds or snow, correct the colors for atmospheric and lighting conditions so that the same car (for example) looks the same in every shot regardless of when it was taken, and produce intelligible images of what the customer wants.
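One standard step toward making “the same car look the same in every shot” is converting raw at-sensor radiance L into top-of-atmosphere reflectance, ρ = π · L · d² / (ESUN · cos θs), where d is the Earth-Sun distance in astronomical units, ESUN is the band’s solar irradiance and θs is the solar zenith angle. This divides out sun elevation and seasonal Earth-Sun distance. The sketch below is a generic textbook version of that conversion, not DigitalGlobe’s pipeline; the `ESUN_RED` value and the two scene geometries are hypothetical, and full atmospheric correction (removing haze and scattering) is a further step it does not attempt.

```python
import math

def toa_reflectance(radiance: float, esun: float, sun_zenith_deg: float,
                    earth_sun_au: float = 1.0) -> float:
    """At-sensor radiance -> top-of-atmosphere reflectance (unitless)."""
    cos_z = math.cos(math.radians(sun_zenith_deg))
    return math.pi * radiance * earth_sun_au ** 2 / (esun * cos_z)

def simulated_radiance(reflectance: float, esun: float, sun_zenith_deg: float,
                       earth_sun_au: float = 1.0) -> float:
    """Inverse of toa_reflectance; used here only to fake two observations."""
    cos_z = math.cos(math.radians(sun_zenith_deg))
    return reflectance * esun * cos_z / (math.pi * earth_sun_au ** 2)

ESUN_RED = 1550.0        # hypothetical band irradiance, NOT a real calibration value
TRUE_REFLECTANCE = 0.25  # one unchanging surface, e.g. the roof of "the same car"

# Same surface, two hypothetical passes: low morning sun vs. higher midday sun.
morning = simulated_radiance(TRUE_REFLECTANCE, ESUN_RED, 60.0)
midday = simulated_radiance(TRUE_REFLECTANCE, ESUN_RED, 30.0, earth_sun_au=0.985)

print(f"raw radiance: morning={morning:.1f}, midday={midday:.1f}")
print(f"reflectance:  morning={toa_reflectance(morning, ESUN_RED, 60.0):.3f}, "
      f"midday={toa_reflectance(midday, ESUN_RED, 30.0, 0.985):.3f}")
```

The raw radiances differ substantially, yet both reflectance values come back as 0.25, which is what lets an automated algorithm compare images taken under different lighting. Real processing would use the sensor’s published calibration constants rather than the placeholder above.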

Once WorldView-3 is up and running, “we can normalize the imagery so automated image extraction on a global scale is possible,” said Kumar Navulur, DigitalGlobe’s director of next generation products. The company’s algorithms can assess the health of specific trees in an orchard, he told reporters. They can find “all the football fields in Colorado” or, for that matter, in the United States.
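Tree-health mapping of the kind Navulur describes is commonly done with vegetation indices. Here is a minimal sketch assuming the widely used Normalized Difference Vegetation Index (NDVI), which compares near-infrared and red reflectance: healthy leaves reflect strongly in the near infrared and absorb red light. This is a generic remote-sensing technique, not a description of DigitalGlobe’s own algorithms. The tree names, pixel values and `STRESS_THRESHOLD` are all hypothetical.

```python
from statistics import mean

def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total  # guard all-dark pixels

# Hypothetical (NIR, Red) reflectance pairs for the pixels over three trees.
trees = {
    "tree A": [(0.50, 0.05), (0.52, 0.04)],
    "tree B": [(0.45, 0.08), (0.44, 0.09)],
    "tree C": [(0.20, 0.12), (0.18, 0.13)],
}

STRESS_THRESHOLD = 0.5  # hypothetical cutoff; real values depend on crop and season

def flag_stressed(trees, threshold=STRESS_THRESHOLD):
    """Average NDVI per tree and list the trees that fall below the threshold."""
    scores = {name: mean(ndvi(n, r) for n, r in pixels)
              for name, pixels in trees.items()}
    return scores, [name for name, s in scores.items() if s < threshold]

scores, stressed = flag_stressed(trees)
for name, s in scores.items():
    print(f"{name}: mean NDVI {s:.2f}")
print("flag for a closer look:", stressed)  # -> ['tree C']
```

A low score does not diagnose anything by itself; it narrows down which trees a human should inspect.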

Navulur’s wording implied there would be false positives for human analysts to weed out, but even so, that kind of automated pattern recognition could save enormous time and effort in making the first cut. His national security examples were tracking refugees in Sudan and monitoring the Iranian nuclear reactor complex at Bushehr, but a Polish reporter noted that her government is relying on DigitalGlobe for imagery of the current Ukraine crisis. Imagine if someone asked this kind of algorithm to find not football fields but anti-aircraft missile launchers of the kind that shot down Malaysia Airlines flight MH17, or Iraqi Army artillery now in the hands of the so-called Islamic State. Presumably US intelligence agencies already have this kind of capability – we hope – but now, for good or ill, it will be much more widely available.

When Malaysia Airlines flight MH370 disappeared in March, DigitalGlobe posted imagery of possible crash areas online and crowdsourced the search for wreckage to volunteers – over eight million of them, Navulur said. “The human eye, the human brain is very powerful,” he said. But for clients that can’t or shouldn’t muster millions of volunteers – which is most of them, especially on the military and intelligence side of space – what’s critical is automated pattern recognition, much of it borrowed from 3D computer games.

“We don’t want our customers to be in the IT business,” said Navulur, “so we’ve invested in cloud computing [and analysis capabilities to] give them information rather than data… When we have the data and the memory, we can actually do millions and trillions of operations very quickly.”
