Air Combat Commander Doesn’t Trust Project Maven’s Artificial Intelligence — Yet

Before the Air Force will trust AI to pick out targets, Gen. Holmes said, it has to get smarter than a human three-year-old.

ATLAS: Killer Robot? No. Virtual Crewman? Yes.

Alarming headlines to the contrary, the US Army isn’t building robotic “killing machines.” What they really want artificial intelligence to do in combat is much more interesting.

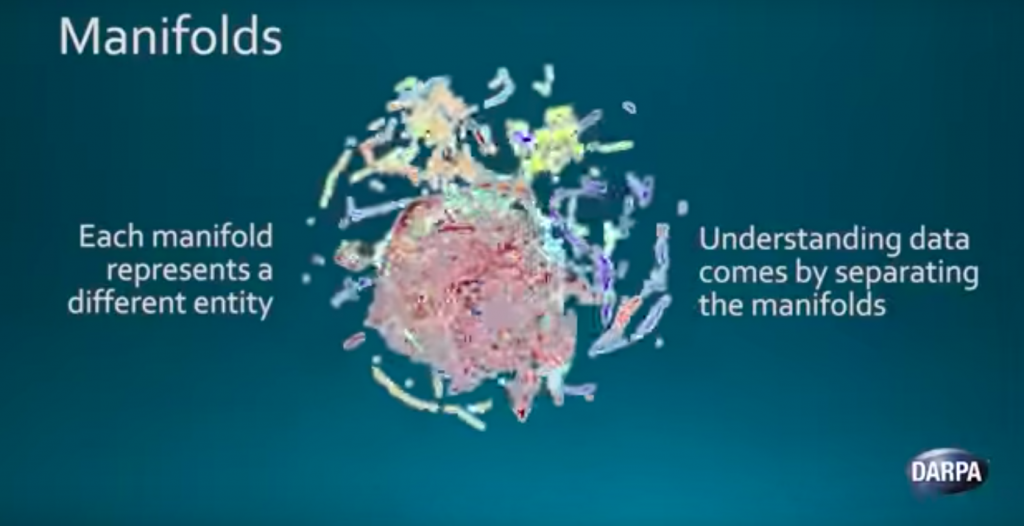

Attacking Artificial Intelligence: How To Trick The Enemy

“Autonomy may look like an Achilles’ heel, and in a lot of ways it is” – but for both sides, DTRA’s Nick Wager said. “I think that’s as much opportunity as that is vulnerability. We are good at this… and we can be better than the threat.”

Show Me The Data: The Pentagon’s Two-Pronged AI Plan

“It’s not going to be just about, ‘how do we get a lot of data?’” deputy undersecretary Lisa Porter told the House subcommittee. “It’s going to be about, how do we develop algorithms that don’t need as much data? How do we develop algorithms that we trust?”

Why A ‘Human In The Loop’ Can’t Control AI: Richard Danzig

“Error is as important as malevolence,” Richard Danzig told me in an interview. “I probably wouldn’t use the word ‘stupidity,’ (because) the people who make these mistakes are frequently quite smart, (but) it’s so complex and the technologies are so opaque that there’s a limit to our understanding.”

Artificial Stupidity: Learning To Trust Artificial Intelligence (Sometimes)

In science fiction and real life alike, there are plenty of horror stories where humans trust artificial intelligence too much. They range from letting the fictional SkyNet control our nuclear weapons to letting Patriots shoot down friendly planes or letting Tesla Autopilot crash into a truck. At the same time, though, there’s also a danger…

Artificial Stupidity: Fumbling The Handoff From AI To Human Control

Science fiction taught us to fear smart machines we can’t control. But reality should teach us to fear smart machines that need us to take control when we’re not ready. From Patriot missiles to Tesla cars to Airbus jets, automated systems have killed human beings, not out of malice, but because the humans operating them…

Artificial Stupidity: When Artificial Intelligence + Human = Disaster

APPLIED PHYSICS LABORATORY: Futurists worry about artificial intelligence becoming too intelligent for humanity’s good. Here and now, however, artificial intelligence can be dangerously dumb. When complacent humans become over-reliant on dumb AI, people can die. The lethal track record goes from the Tesla Autopilot crash last year, to the Air France 447 disaster that killed 228…