Swarm 2: The Navy’s Robotic Hive Mind

Robot boats are getting smarter fast. Two years ago, on the James River, the Office of Naval Research dropped jaws with a “swarm” of 13 unmanned craft that could detect threats and react to them without human intervention. This fall, on the Chesapeake Bay, ONR tested ro-boats with dramatically upgraded software. The Navy called this experiment “Swarm 2” — but a better description would be “Hive Mind.”

In the 2014 test, the 13 boats exchanged sensor data and came to a consensus on what they saw. That kind of “common operating picture” is hard to program: If the software can’t figure out when two sensors are seeing the same thing from different angles, it will see double like a drunk man. So getting this data sharing to work was a feat in itself. But in ’14, once the swarmboats pooled perceptions collectively, each boat determined its course of action individually.
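To make the "seeing double" problem concrete, here is a minimal sketch of one common way to merge overlapping reports: treat any two position reports that fall within a fixed distance of each other as the same contact. The article does not describe ONR's actual fusion method; the function name `fuse_contacts`, the `gate_m` threshold, and the flat x/y coordinates in meters are all assumptions made for illustration.

```python
from math import hypot


def fuse_contacts(reports, gate_m=50.0):
    """Merge (x, y) position reports that lie within gate_m of an existing contact.

    Hypothetical illustration only: each contact keeps a running mean position
    and a count of how many reports were merged into it.
    """
    contacts = []
    for x, y in reports:
        for c in contacts:
            if hypot(c["x"] - x, c["y"] - y) <= gate_m:
                # Same object seen again: update the running mean position.
                c["n"] += 1
                c["x"] += (x - c["x"]) / c["n"]
                c["y"] += (y - c["y"]) / c["n"]
                break
        else:
            # No existing contact is close enough: start a new one.
            contacts.append({"x": x, "y": y, "n": 1})
    return contacts


# Two boats report the same vessel from slightly different positions,
# and a third report describes a vessel far away.
picture = fuse_contacts([(100.0, 100.0), (110.0, 95.0), (900.0, 400.0)])
```

With too small a gate the software "sees double"; with too large a gate it merges distinct vessels, which is one reason this kind of shared picture is hard to get right.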

In many situations, this works okay: The robots all have the same programming, so they’ll react the same way to the same situation, for example by converging on threats like a swarm of angry bees — hence the term “swarming behavior.” But swarming isn’t planning, and there’s a real risk your high-tech robots will start acting like a little kids’ soccer team: Everyone runs after the ball at once, nobody guards the goal. At worst, all the robots go after the first threat they see, only to let the next one right past them.

US Navy experimental unmanned boat.

So in the two years between the experiments, Robert Brizzolara and his team developed software that let the boats come up with a plan together and allocate tasks. Instead of a swarm of insects, they progressed to something like Star Trek’s Borg Collective. That enabled the robots to make a plan, come up with a division of labor, and even hold forces in reserve.

“We had some runs in the demonstration where we had two bad guys come in instead of one,” Brizzolara told reporters this afternoon. The four robot boats in the experiment would detect the first threat and assign one of their number to shadow it, while the other three stayed on patrol; when the second threat arrived, another robot went after it, and the last two stayed on patrol. With the 2014 software, he said, they might all have just gone after the first target.
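The two-intruder run can be sketched as a simple allocation rule: each new threat gets the nearest boat that is not already busy, and every other boat stays on patrol as a reserve. This is only an illustration of the behavior Brizzolara describes, not the Navy's algorithm; the function `allocate`, the boat and threat names, and the coordinates are hypothetical.

```python
from math import hypot


def allocate(boats, threats, assignments=None):
    """Return {threat_id: boat_name}, sending one free boat after each new threat.

    boats:   {name: (x, y)} positions of all boats (hypothetical layout)
    threats: {threat_id: (x, y)} positions of currently detected threats
    assignments: previous result, so boats already trailing keep their target
    """
    # Keep earlier assignments only for threats that are still present.
    current = {t: b for t, b in (assignments or {}).items() if t in threats}
    busy = set(current.values())
    for tid, (tx, ty) in sorted(threats.items()):
        if tid in current:
            continue
        free = [b for b in boats if b not in busy]
        if not free:
            break  # no reserve left; remaining threats go unassigned
        nearest = min(free, key=lambda b: hypot(boats[b][0] - tx, boats[b][1] - ty))
        current[tid] = nearest
        busy.add(nearest)
    return current


boats = {"a": (0, 0), "b": (100, 0), "c": (0, 100), "d": (100, 100)}
first = allocate(boats, {"t1": (90, 10)})
second = allocate(boats, {"t1": (90, 10), "t2": (10, 90)}, first)
on_patrol = sorted(set(boats) - set(second.values()))
```

The key difference from the 2014 behavior is the shared `busy` set: because the boats agree on who is already committed, the second threat draws a fresh boat instead of finding the whole group chasing the first.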

In some ways, this year’s experiment seems less ambitious than 2014’s: It involves fewer unmanned boats — four instead of 13 — conducting a less dramatic mission — protecting a fixed harbor area instead of escorting a moving ship. But within this smaller scope, the depth and complexity of autonomous behavior was much greater.

The hive mind-like ability for the robots to plan together isn’t the only advance in this year’s experiment. The software now includes a “behavior engine” that allows programmers to create a whole library of complex patterns of action. Boats with the 2014 software were capable of just two behaviors: escort (a friendly ship) and attack (a threat). Boats with the 2016 software have four: patrol (an area), classify (a vessel as friendly or hostile), track (a contact with sensors), and trail (i.e. shadow a suspect vessel). Looking forward, the behavior engine approach should also make the software much easier to upgrade with new behaviors.
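One way to picture a behavior library that is easy to extend is a registry keyed by behavior name, so that adding a behavior means registering one more function rather than rewriting the mission logic. The sketch below is an assumption about the general pattern, not a description of ONR's behavior engine; `BEHAVIORS`, `behavior`, and `run` are hypothetical names, and the functions only return a description of what a boat would do.

```python
BEHAVIORS = {}  # hypothetical library: behavior name -> function


def behavior(name):
    """Decorator that adds a function to the behavior library under `name`."""
    def register(fn):
        BEHAVIORS[name] = fn
        return fn
    return register


@behavior("patrol")
def patrol(boat, target=None):
    return f"{boat} patrols its assigned area"


@behavior("classify")
def classify(boat, target=None):
    return f"{boat} classifies {target} as friendly or hostile"


@behavior("track")
def track(boat, target=None):
    return f"{boat} tracks {target} with sensors"


@behavior("trail")
def trail(boat, target=None):
    return f"{boat} trails {target}"


def run(name, boat, target=None):
    """Look up a behavior by name and execute it for one boat."""
    if name not in BEHAVIORS:
        raise KeyError(f"behavior not loaded: {name}")
    return BEHAVIORS[name](boat, target)


message = run("trail", "boat-1", "contact-7")
```

A mission package in this picture would simply list the behavior names it needs, and a behavior that has not been loaded cannot be triggered at all.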

Eventually, Brizzolara envisions the boats being able to conduct a wide range of different missions, with each mission software package able to pick and choose useful behaviors for the situation from a large library. This approach should allow many different types of vessels to use the same software modules, reducing programming costs; already ONR’s CARACaS software works on more than 15 types of small craft, and components of the program are installed on the world’s largest unmanned vessel, the Sea Hunter.

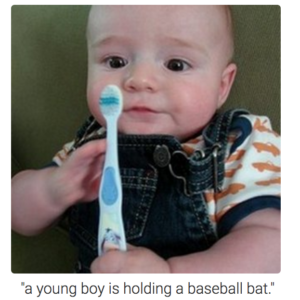

An example of the shortcomings of artificial intelligence when it comes to image recognition. (Andrej Karpathy, Li Fei-Fei, Stanford University)

The third advance this year is in automated target recognition. That’s always a difficult programming challenge. Computers don’t benefit from millions of years of evolution to refine their perceptual systems, and even when programmers use machine learning to teach the software how to recognize different objects out of vast libraries, the algorithms are sometimes ludicrously wrong. DARPA director Arati Prabhakar’s favorite example is an otherwise sophisticated program that identified a baby with a toothbrush as a boy with a baseball bat.

Now add to these general challenges the very specific problems of the maritime environment, where both the sensor and the target are jostled unpredictably by the waves, with rain and fog and humid air often distorting the image. Brizzolara’s team looked at automated target recognition algorithms from other projects, he said, but in the end they had to find a solution specifically programmed for watercraft.

For this exercise, the robot boats were taught to recognize different types of “enemy” and “friendly” watercraft: The former triggered the “track” and “trail” behaviors — and could have triggered “attack” if that behavior module had been loaded — while the latter were let pass. A human operator supervising the boats could override them and manually designate a contact as friend or foe, but as long as the robots were doing the right thing, the human only had to watch.

This level of autonomy is a stark contrast to first-generation drones like the famous Predator, which are human-piloted aircraft whose pilot just doesn’t happen to be onboard. The Predator’s remote-control model requires a lot of uninterrupted bandwidth to handle all the constant, detailed feedback between pilot and drone. ONR’s robot boats, by contrast, were deliberately designed to use as little bandwidth as possible, whether to communicate with their human overseers or with each other. As potential adversaries grow ever more capable of disrupting US networks or shutting them down altogether, robots grow ever more capable of fighting on regardless.
