Should Pentagon Let Robots Kill Humans? Maybe


A Punisher unmanned ground vehicle follows a soldier during the PACMAN-I experiment in Hawaii.

Imagine battles unfolding faster than the human mind can handle, with artificial intelligences choosing their tactics and targets largely on their own. The former four-star commander in Afghanistan, John Allen, and an artificial intelligence entrepreneur, Amir Husain, have teamed up to develop a concept for what they call “hyperwar,” rolled out in the July issue of Proceedings. It’s an intriguing take on the quest for the AI edge we here at Breaking Defense have called the War Algorithm — but their article leaves some crucial questions unanswered.

Gen. John Allen (now retired) on active duty as a Marine

So I spoke with both men (separately) to ask them: Is this a realistic vision, or does it ignore Clausewitzian constants like fog of war and friction? What’s the place of humans in this future force? And, most urgently, does the sheer speed of hyperwar require us to delegate to robots the decisions about what human beings to kill?

This Terminator Conundrum, as Joint Chiefs Vice-Chairman Paul Selva calls it, is so fraught that the previous Secretary of Defense, Ash Carter, said bluntly the US will “never” give autonomous systems authority to kill without human approval. It’s so fraught, in fact, that the two co-authors give distinctly different answers, albeit not incompatible ones.

“The reality is machines (already) and will continue to kill people autonomously,” Husain told me. The automated Phalanx Gatling guns that defend Navy warships, for example, will shoot down manned aircraft as well as missiles. What’s more, the Navy’s entire Aegis air and missile defense system has a rarely used automatic mode that will prioritize and fire on targets, manned and unmanned, without human intervention.

Amir Husain

Other countries such as Russia and China have no qualms about fielding such systems on a large scale. “We need to accept the fact that our hand in this matter will be forced, that near-peer countries are already investing (in such systems),” Husain said. If adversaries can act faster because they don’t slow their system down for moral scruples, he said, “what option do you really have?”

Instead of pausing to consult a human mind, Husain argues, we need to program morality into the artificial intelligences themselves, both individual AIs and, even more important, the whole network. If one robot begins acting badly, whether through faulty programming or enemy hacking, the rest of the network needs to be able to disable and if necessary destroy it, he said, just as society takes action when an individual human violates generally accepted ethics.

General Allen, as a career Marine, is naturally more focused on the human role and more cautious about the role of AI. “We’ve always exercised restraint in the employment of military force,” said Allen, who commanded forces in Afghanistan under strict Rules Of Engagement (ROE) to protect civilian lives. “Our enemies have never been constrained by that, but that will not change for us in a hyperwar environment,” he said. So while hyperwar requires delegating many technical and tactical decisions to AI, he said, “the human must retain that aspect of warfare which is inherently the moral dimension, which is the taking of human life.”

“That doesn’t mean that the human has to pull the trigger,” Allen continued. While we won’t unleash autonomous systems to fire at will across the battlespace, he said, he could envision humans designating a “killbox” known to contain only enemy forces and giving AIs free rein to kill humans in that specific place for a specific time.

Confirming such a kill zone is free of civilians, though, is much easier in warfare at sea or in the air than in combat on land. (It’s worth noting the article appears in the Proceedings of the US Naval Institute, not in, say, the Army War College’s Parameters). Allen and Husain envision seeding an urban area with a wide variety of sensors — electro-optical, infra-red, acoustic, seismic, radio rangefinding, and so on — that all report back to the network, where intelligence analysis AIs fuse all these different perspectives into one coherent, actionable picture.

A soldier holds a PD-100 mini-drone during the PACMAN-I experiment in Hawaii.

Once the network finds a target, Husain added, the weapons we launch at it can be much more precise, as well. “It’s actually going to be much safer than the way warfare is waged today,” he said. Don’t imagine robotic versions of present-day weapons, he said, such as a pilotless attack helicopter, an uncrewed battle tank, or a self-firing artillery piece, but rather swarms of drones “as small as a bee.” These low-end robots would not be Terminators but basically guided bullets, able to take out a single terrorist or other target with less chance of collateral damage than a human rifleman today.

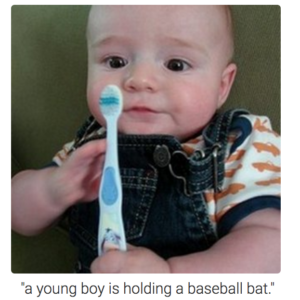

That said, it’s no use if you precisely take out the wrong target, so the AIs’ ability to know what they’re shooting at is crucial. Research in object recognition is making great strides, but AIs still make mistakes, and when they do, they’re often baffling to a human observer. Former DARPA director Arati Prabhakar’s favorite example was an AI that labeled a photo of a baby holding a toothbrush as “a young boy is holding a baseball bat.”

An example of the shortcomings of artificial intelligence when it comes to image recognition. (Andrej Karpathy, Li Fei-Fei, Stanford University)

Allen and Husain seem confident these problems of machine vision can be solved, in large part by cross-checking different types of sensor data on the same target. For example, in the 1999 Kosovo campaign, Serbian forces fooled NATO airstrikes into targeting decoy tanks with sun-heated water inside to simulate the heat of an engine — but radar would have seen right through them.

“The massive infusion of data from across multiple sensors…will create a very high level of confidence with respect to target identification,” Allen told me.
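Why would more sensors mean more confidence, and when would they not? One textbook way to see it is naive Bayesian fusion, sketched below in Python. This is an illustration under stated assumptions, not the method Allen and Husain describe: each sensor is assumed to report a likelihood ratio (how much more probable its reading is if the object is a real tank rather than a decoy), and the sensors’ errors are assumed to be independent. The function name `fuse_log_odds` and all the numbers are hypothetical.

```python
import math


def fuse_log_odds(prior: float, likelihood_ratios: list[float]) -> float:
    """Return P(object is a real target) after combining sensor evidence.

    Assumes (hypothetically) that each sensor's reading is summarized as a
    likelihood ratio P(reading | real) / P(reading | decoy) and that the
    sensors err independently of one another (naive Bayes).
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    # Work in log-odds so independent evidence simply adds up.
    log_odds = math.log(prior / (1.0 - prior))
    for lr in likelihood_ratios:
        if lr <= 0.0:
            raise ValueError("likelihood ratios must be positive")
        log_odds += math.log(lr)
    # Convert log-odds back to a probability (logistic function).
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    prior = 0.5  # hypothetical: no initial reason to favor tank or decoy
    thermal = 4.0  # hypothetical: a warm "engine" looks 4x likelier for a real tank
    radar_decoy = 0.1  # hypothetical: radar return looks like a decoy
    radar_tank = 5.0  # hypothetical: radar return looks like real armor

    print(round(fuse_log_odds(prior, [thermal]), 3))  # 0.8
    print(round(fuse_log_odds(prior, [thermal, radar_decoy]), 3))  # 0.286
    print(round(fuse_log_odds(prior, [thermal, radar_tank]), 3))  # 0.952
```

In the Kosovo-style case, the heated decoy fools the thermal sensor alone (0.8), but a disagreeing radar pulls the estimate down to about 0.29, while an agreeing radar pushes it above 0.95. The independence assumption does the heavy lifting: if two sensors share the same blind spot, multiplying their evidence overstates confidence, which is one concrete form the fog of war can take.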

The problem with these arguments is that I heard many of them 16 years ago, from the apostles of the Revolution in Military Affairs, aka RMA, aka “transformation.” They promised new technology would allow “lifting the fog of war,” as former Vice-Chairman of the Joint Chiefs William Owens entitled his book on the subject. This promise wasn’t fully realized in the 2003 invasion of Iraq, when sandstorms and simple surprise let Iraqi forces sneak up on US units, and it fell apart completely in the guerrilla warfare that followed, as Allen knows firsthand.

A young Marine reaches out for a hand-launched drone.

Allen and Husain explicitly talk about hyperwar as a “revolution in military affairs.” In fact, they argue it is merely the military manifestation of a “revolution in human affairs” that will affect all aspects of society. They talk about the potential for “perfectly coordinated” military action. Are they falling into the same trap as Admiral Owens?

Allen assured me he hasn’t forgotten his Clausewitz. “You’ll never do away with fog and friction because the nature of warfare is (fundamentally) human,” Allen said, “but you can change the character of war through advances in technology.”

“Artificial intelligence gives a relative advantage over your opponent by giving you a level of clarity — it’s never perfect; you’re never going to have perfect clarity as a commander — but…a level of clarity that can give you a higher confidence with respect to your decision making,” Allen continued.

Which brings us back to the human dimension after all. “This is going to require that we train and educate our young officers, and our leaders and our more senior folks, to think in different ways (and) to be comfortable in that environment of fast-moving decision-making,” Allen said. Rather than clumsily force the AIs to pause while the human catches up, or unleash the AIs without human supervision, the goal is a symbiosis, Allen said, where “the human is still in the loop, but the human has not slowed down the loop.”
