Robot Brains Where & When You Want ‘Em

DARPA Persistent Close Air Support (PCAS) system by Raytheon

Classic science fiction imagined evil master computers remote-controlling their mindless robot minions. It imagined good-guy droids that were basically humans in tin suits. But as the actual science of autonomy evolves, reality is looking a lot weirder.

The user interface may be on an ordinary Android tablet, but the artificial intelligence itself may reside in a pod under an airplane’s wing, in a ground station directing a distant drone, or in a processor strapped to a soldier. Or the electronic brain may be everywhere and nowhere at once, with the tasks of thinking distributed across multiple locations in a network. That flexibility is central to Deputy Defense Secretary Bob Work’s vision of the Third Offset Strategy, in which artificial intelligence and human creativity combine, like a centaur, to create an enduring technological advantage for US forces.

Robert Work

In essence, software is a payload. Once you get the algorithms to work in one place — on an A-10 jet or a V-22 tiltrotor, for example — you can repackage them relatively easily to work somewhere else. That includes the algorithms for autonomy.

We’re “developing different autonomy services that can be hosted at different locations,” said Raytheon senior engineering fellow David Bossert. Using Defense Department open standards and a “service-oriented architecture,” the overall autonomy package consists of multiple optional modules that can be tailored to the mission and the platform. The user gets information and issues commands with an Android tablet, but the interface software on the tablet can be either the Air Force-developed Android Tactical Assault Kit (ATAK) or the Marine Corps KILSWITCH.

That approach allowed Raytheon to port its Persistent Close Air Support (PCAS) software from an Air Force jet to a Marine tilt-rotor, an Army helicopter, and ultimately an Unmanned Aerial Vehicle, he said. “Even though we had some of the PCAS services on an A-10 and then a MV-22 and an AH-64, we were able to take them and put them on a UAV,” Bossert told me. Now Raytheon is re-repackaging PCAS for the infantry as part of DARPA’s Squad-X program.

On the A-10, the PCAS computer and its communications kit were housed in a pod under the wing. There wasn’t room to do that on the much smaller MQ-1C Gray Eagle, the Army variant of the famous Predator, Bossert said, so “all the autonomy and computing resources [were] hosted in the ground control stations.” In Squad-X, he continued, “each soldier will have a processor,” as will each Unmanned Ground Vehicle (UGV).

“The beauty about this is it’s not necessarily hardwired like an aircraft bus,” he said. “It’s actually distributed so you can do this over the radio.” Different parts of the computer “brain” can be in different physical locations.
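To make the idea of relocatable autonomy services concrete, here is a minimal sketch of the same set of services being bound to different hosts on different platforms. The hosting locations come from Bossert's description (wing pod, ground control station, soldier and UGV processors); the service names, the `place_services` function, and the round-robin assignment are hypothetical illustrations, not Raytheon's actual design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    service: str  # logical autonomy service (names here are hypothetical)
    host: str     # physical location where that service runs


# Hosting locations as described in the article.
HOSTS_BY_PLATFORM = {
    "A-10": ["wing_pod"],
    "MQ-1C": ["ground_control_station"],
    "Squad-X": ["soldier_processor", "ugv_processor"],
}

# Hypothetical service names, loosely based on what PCAS is said to do.
SERVICES = ["target_handoff", "route_planning", "weapon_recommendation"]


def place_services(platform, services=SERVICES):
    """Bind each service to a host on the given platform.

    Round-robin assignment is an assumption made for illustration only.
    """
    hosts = HOSTS_BY_PLATFORM[platform]
    return [Placement(s, hosts[i % len(hosts)]) for i, s in enumerate(services)]


a10 = place_services("A-10")
squad = place_services("Squad-X")
for p in squad:
    print(f"{p.service} -> {p.host}")
```

The point of the sketch is that the list of services never changes between platforms; only the mapping from service to host does.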

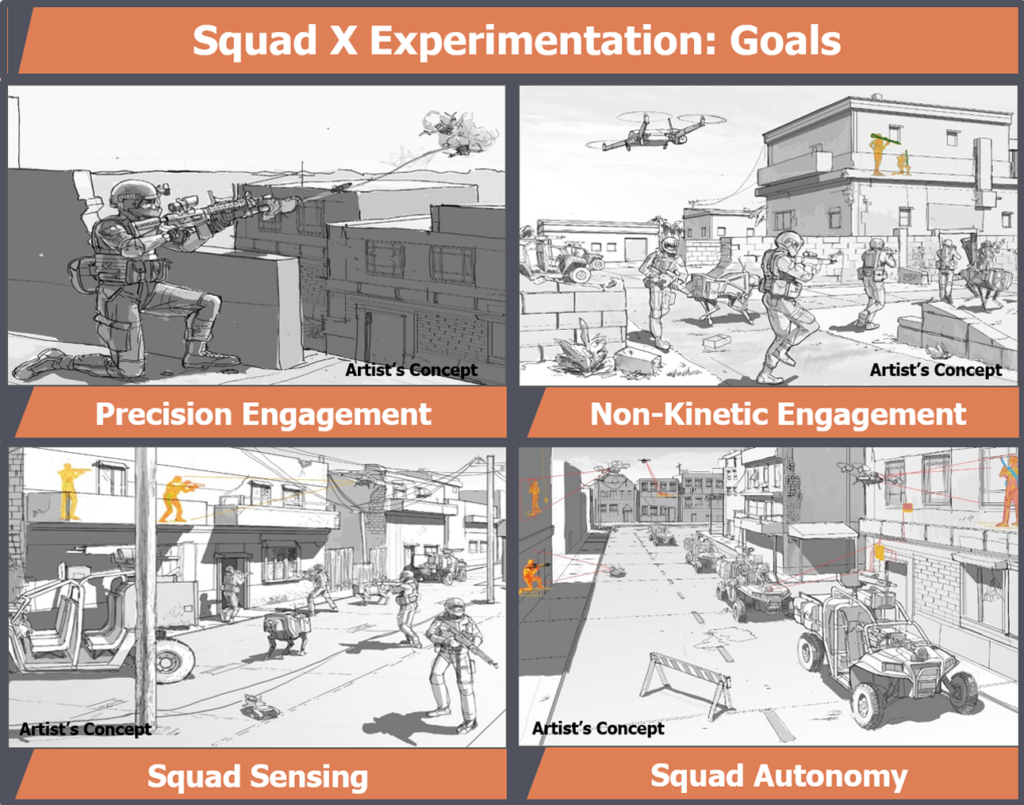

DARPA Squad-X focus areas

Such distributed systems will be particularly useful for the DARPA Squad-X initiative, which aims to bring the information age — networks, smart weapons, robotics — to the blood-and-mud world of the infantry squad. Raytheon is handling the autonomy portion of the project. It would be horrendously impractical to have one big computer brain in a pod or control station. How would the squad carry it? Where would they plug it in? So Squad-X will use lots of little brains, linked together as one mind via radio in a wireless network, building on current Army systems like Nett Warrior.

That network will be designed to be resilient against hacking, jamming, and mundane interference, Bossert said, but the software has to keep working even when the network goes down. One lesson from the PCAS project is “it’s not if the radio drops out, it’s when the radio drops out,” he said. “I’m going to lose comms when I least expect it.”
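One way to picture software that keeps working through a radio dropout is a node that treats the network as optional: it always maintains its own local picture, and only queues outgoing reports when the link looks stale. The sketch below is a simplified illustration under that assumption; the `SquadNode` class, the five-second timeout, and the contact identifiers are all hypothetical and are not drawn from PCAS or Squad-X.

```python
from dataclasses import dataclass, field


@dataclass
class SquadNode:
    link_timeout_s: float = 5.0            # hypothetical staleness threshold
    last_heard_s: float = float("-inf")    # time of last network update
    shared_picture: dict = field(default_factory=dict)
    local_picture: dict = field(default_factory=dict)
    outbox: list = field(default_factory=list)  # reports awaiting a link

    def on_network_update(self, now_s, picture):
        """Record a fresh update received over the radio."""
        self.last_heard_s = now_s
        self.shared_picture = dict(picture)

    def link_up(self, now_s):
        return now_s - self.last_heard_s <= self.link_timeout_s

    def observe(self, now_s, contact_id, position):
        """Always store locally; queue for later if the link is down."""
        self.local_picture[contact_id] = position
        if not self.link_up(now_s):
            self.outbox.append((contact_id, position))

    def picture(self, now_s):
        """Last known shared picture overlaid with local observations."""
        merged = dict(self.shared_picture)
        merged.update(self.local_picture)
        mode = "networked" if self.link_up(now_s) else "degraded"
        return merged, mode


node = SquadNode()
node.on_network_update(0.0, {"uav-1": (10, 20)})
node.observe(2.0, "contact-a", (5, 5))  # link still fresh, nothing queued
node.observe(9.0, "contact-b", (7, 1))  # link stale, report is queued
picture, mode = node.picture(9.0)
print(mode, sorted(picture))
```

In this sketch the node never blocks on the radio: losing comms changes the reported mode and fills the outbox, but the local picture stays usable.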

Raytheon isn’t just applying the lessons of PCAS to Squad-X: It’s actually building its software for the one on the foundation of the other. Both are DARPA projects involving autonomy, but the details are very different.

An A-10 “Warthog” firing its infamous 30 mm gun.

For PCAS, said Bossert, “our only metric [of success] was to decrease the timeline for Close Air Support [CAS] from about 30 minutes, [given] an A-10 that was 20 nautical miles away… down to six minutes or less,” which essentially is the time it took the A-10 to cover the 20-mile distance. The PCAS software streamlined the process of calling in an airstrike to the point where all the planning was done during that six-minute transit. The software transmitted target information from the forward observer to the pilot, outlined a route for the attack run, and suggested the best weapon to destroy the target with minimum collateral damage.
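As a quick check on those figures, assuming a constant speed and a straight-line transit (simplifications not stated in the article), covering 20 nautical miles in six minutes implies an average speed of:

$$
v = \frac{20\ \text{nmi}}{6\ \text{min}} \times 60\ \frac{\text{min}}{\text{h}} = 200\ \text{knots}
$$

On that reading, the six-minute target leaves essentially no slack beyond the flight itself, which is why the planning has to happen during the transit.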

Squad-X is much more complex. First of all, for robots, operations on the ground (a cluttered, messy environment full of both stationary and moving objects) are much more complex than operations in the relatively empty domain of the air. Second, Squad-X envisions much more than calling in air support. Its software has to control mini-drones and Unmanned Ground Vehicles (UGVs), integrate data from long-range sensors, and update soldiers on the tactical situation, all without relying on GPS.

Just fusing all the disparate data can be tricky. A human driving a car and talking on a cellphone will show up on optical sensors, acoustic sensors, and radio-frequency sensors, for example, so you need software that can figure out that all three contacts represent the same real-world object, not three different things. “There are lots of correlation engines out there that do pretty well,” said Bossert, but getting them to work in a realistic tactical environment is “going to be a big part of the experimentation.”
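The correlation problem in the driver-with-a-cellphone example can be sketched as a simple distance gate: contacts from different sensor types that fall close together are merged into one track. This is a toy illustration, not any of the correlation engines Bossert mentions; the `Contact` and `Track` types, the shared local coordinate frame, and the 15-metre gate are all assumptions.

```python
from dataclasses import dataclass, field
from math import hypot


@dataclass(frozen=True)
class Contact:
    sensor: str  # e.g. "optical", "acoustic", "rf"
    x: float     # metres east in a shared local frame (assumed)
    y: float     # metres north in the same frame


@dataclass
class Track:
    contacts: list = field(default_factory=list)

    def centroid(self):
        n = len(self.contacts)
        return (sum(c.x for c in self.contacts) / n,
                sum(c.y for c in self.contacts) / n)


def correlate(contacts, gate_m=15.0):
    """Greedily merge contacts into tracks by proximity.

    A contact joins the nearest track within gate_m that does not
    already hold a contact from the same sensor type; otherwise it
    starts a new track.
    """
    tracks = []
    for c in contacts:
        best, best_d = None, gate_m
        for t in tracks:
            if any(o.sensor == c.sensor for o in t.contacts):
                continue  # one sensor type cannot see one object twice
            cx, cy = t.centroid()
            d = hypot(c.x - cx, c.y - cy)
            if d <= best_d:
                best, best_d = t, d
        if best is None:
            best = Track()
            tracks.append(best)
        best.contacts.append(c)
    return tracks


contacts = [
    Contact("optical", 100.0, 200.0),
    Contact("acoustic", 104.0, 197.0),
    Contact("rf", 98.0, 203.0),
    Contact("optical", 400.0, 50.0),  # a second, distant object
]
tracks = correlate(contacts)
for t in tracks:
    print(sorted(c.sensor for c in t.contacts))
```

Here the first three contacts collapse into a single track and the distant optical contact becomes its own. A fielded system would also have to cope with sensor error, motion over time, and clutter, which is presumably where the experimentation Bossert describes comes in.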

Then there’s the robotics aspect. Rather than remotely piloted robots like the Predator or bomb-squad bots, the Squad-X robots are supposed to figure out their own course of action in accordance with human commands. Those commands will initially be delivered over the Android tablet but may one day come by voice and gesture. “Commercial industry’s helping us quite a bit” on this challenge, Bossert said, pointing to Google’s extensive work on self-driving cars.

Clearly there’s a tremendous amount of work to be done, but there’s tremendous potential as well. When it comes to unmanned systems, said the head of Raytheon’s advanced missile systems unit, Thomas Bussing, “we’re not technology-limited; we’re more comfort-limited.”

“The systems that we have today could do a lot more,” Bussing said, if we had the imagination and daring to exploit them.
