Do Young Humans + Artificial Intelligence = Cybersecurity?

West Point cadets conduct a cyber exercise.

WASHINGTON: The Army is recruiting smart young soldiers to wage cyber war. But human talent is not enough. Ultimately, say experts, cyberspace is so vast, so complex, so constantly changing that only artificial intelligence can keep up. America can’t prevail in cyberspace through superior numbers. We could never match China hacker for hacker. So our best shot might be an elite corps of genius hackers whose impact is multiplied by automation.

Lt. Gen. Paul Nakasone

Talent definitely matters – and it is not distributed equally. “Our best (coders) are 50 to 100 times better than their peers,” Lt. Gen. Paul Nakasone, head of Army Cyber Command (ARCYBER), said. There’s no other military profession, from snipers to pilots to submariners, that has such a divide between the best and the rest, he told last week’s International Conference on Cyber Conflict (CyCon), co-sponsored by the US Army and NATO. One of the major lessons learned from the last 18 months standing up elite Cyber Protection Teams, he said, is the importance of this kind of “super-empowered individual.”

Such super-hackers, of course, exist in the civilian world as well. One young man who goes by the handle Loki “over the course of a weekend…found zero-day vulnerabilities, vulnerabilities no one else had found, in Google Chrome, Internet Explorer and Apple Safari,” Carnegie Mellon CyLab director David Brumley said. “This guy could own 80 percent of all browsers running today.” Fortunately, Loki’s one of the good guys, so he reported the vulnerabilities – and got paid for it – instead of exploiting them.

David Brumley

The strategic problem with relying on human beings, however, is simple. We don’t have enough of them. “We don’t want to be in a person-on-person battle because, you know what, it just doesn’t scale,” Brumley told CyCon. “The US has six percent of the world’s population (actually 4.4). Other countries, other coalitions of countries are going to have more people, (including) more people like Loki.”

That creates a strategic imperative for automation: software programs that can detect vulnerabilities and ideally even patch them without human intervention. Brumley’s startup, ForAllSecure, created just such a program, called Mayhem, that won DARPA’s 2016 Cyber Grand Challenge against other automated cyber-attack and defense software. However, that contest was held under artificial conditions, Brumley said, and Mayhem lost against skilled human hackers – although it found some kinds of bugs better and faster. So automation may not be entirely ready for the real world yet.

Even when cybersecurity automation does come of age, Brumley said, we’ll still need those elite humans. “What these top hackers are able to do… is come up with new ways of attacking problems that the computer wasn’t programmed to do,” he said. “I don’t think computers or autonomous systems are going to replace humans; I think they’re going to augment them. They’re going to allow the human to be free to explore these creative pursuits.”

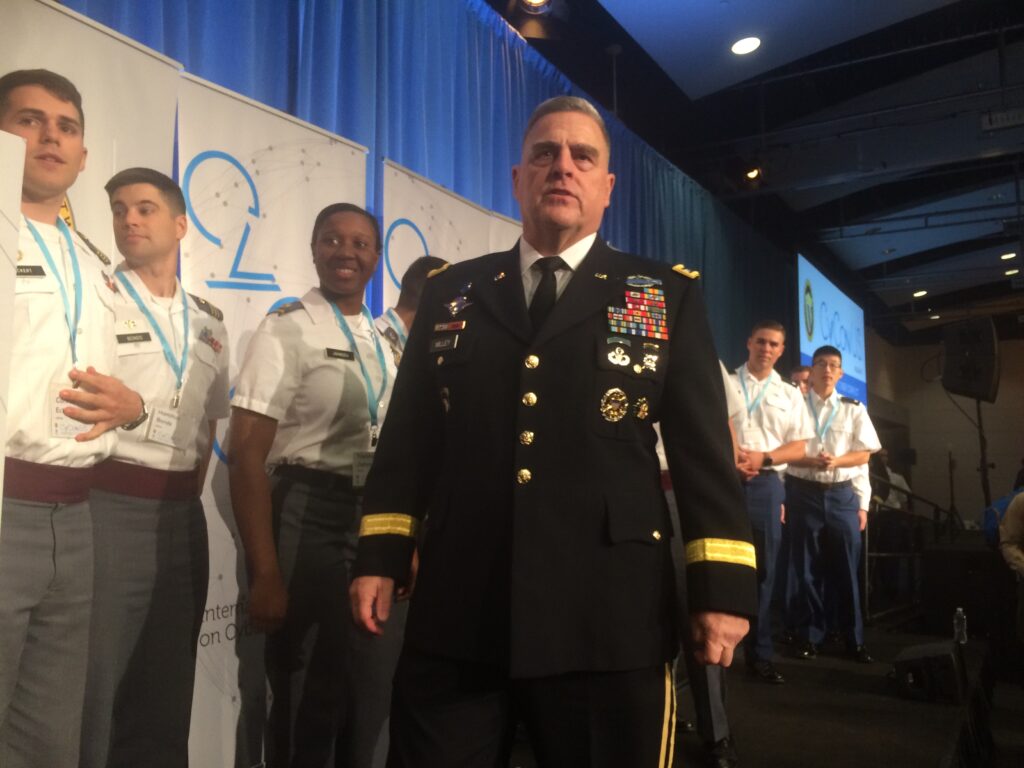

Gen. Mark Milley, Army Chief of Staff, with cadets and young Army officers at the CyCon cybersecurity conference.

Young Humans

“For those of you who are in the military who are 25 years old or younger, captains and below…you’re going to have to lead the way. People my age do not have the answers,” the Army’s Chief of Staff said at CyCon. After his speech, Gen. Mark Milley called up to the stage lieutenants and West Point cadets – but not captains, he joked, “you’re getting too old.” (He let the captains come too.)

“It’s very interesting to command an organization where the true talent and brainpower is certainly not at the top, but is at the beginning stages,” said Lt. Gen. Nakasone at the same event. “It’s the lieutenants. It’s the sergeants. It’s the young captains.”

SOURCE: US Army Cyber Command

The Army has rapidly grown its cyber force. It now has 8,920 uniformed cyber soldiers, almost a ninefold increase since a year ago (and cyber only became an official branch three years ago, when it had just six officers). There are also 5,231 Army civilians, 3,814 US contractors, and 788 local nationals around the world. All told, “there’s 19,000 of them,” Milley said. “I suspect it’s gonna get a lot bigger.”

At the most elite level, US Cyber Command officially certified the Army’s 41 active-component Cyber Protection Teams and the Navy’s 40 teams as reaching Full Operational Capability this fall, a year ahead of schedule. (We’re awaiting word on the Air Force’s 39). At full strength, the teams will total about 6,200 people, a mix of troops, government civilians, and contractors.

To speed up recruiting, Gen. Milley wants to bring in cyber experts at a higher rank than fresh-out-of-ROTC second lieutenants – say, as captains. Such “direct commissioning” is used today for doctors, lawyers, and chaplains, but Milley notes it was used much more extensively in World War II, notably to staff the famous Office of Strategic Services (OSS). Why not revive that model? “There’s some bonafide brilliant dudes out there. We ought to try to get them, even if it’s only 24 months, 36 months,” he said. “They’re so rich we won’t even have to pay ’em.”

(That last line got a big laugh, as intended, but “dollar-a-year men” have served their country before, including during the World Wars.)

No matter how much the military improves recruiting, however, it will probably never have enough talent in-house. (Neither will business, which is short an estimated two million cyber professionals worldwide.) So how does the military tap into outside talent?

One method widely used in the commercial world is bug bounties: paying freelance hackers like Loki for every unique vulnerability they report. (Note that the Chinese military runs much of its hacking this way.) The Defense Department has run three bounty programs in the last year – Hack the Pentagon, Hack the Army, and Hack the Air Force – that found roughly 500 bugs and paid out $300,000. That’s “millions” less than traditional security approaches, says HackerOne, which ran the programs.

What’s really striking, though, is the almost 3,000 bugs that people have reported for free. Historically, the Pentagon made it almost impossible for white-hat hackers to report bugs they find, but a Vulnerability Disclosure Policy created alongside the bug bounties “has been widely successful beyond anyone’s best expectation,” said HackerOne co-founder Alex Rice, “without any actual monetary component.”

So what’s motivating people to report? For some it’s patriotism, Rice told me, but participating hackers come from more than 50 countries. In many cases, he said, hackers are motivated by the thrill of the challenge, the delight of solving a puzzle, the prestige of saying they “hacked the Pentagon,” or just a genuine desire to do good.

The other big advantage of outsourcing security this way, said Rice, is the volunteer hackers test your system in many more different ways than any one security contractor could afford to do. “Every single model, every single tool, every single scanner has slightly different strengths, but also slightly different blind spots,” Rice said. “One of the things that is so incredibly powerful about this model is that every researcher brings a slightly different methodology and a slightly different toolset to the problem.”

Those toolsets increasingly include automation and artificial intelligence.

Seven autonomous cybersecurity systems face off for the DARPA Cyber Grand Challenge in 2016.

Automation & AI

“I’m the bad news guy,” Vinton Cerf, co-inventor of the Internet, told the audience at CyCon. “We’re losing this battle (for) safety, privacy, and security in cyberspace.”

Why? “The fundamental reason we have this problem is we have really bad programming tools,” Cerf said. “We don’t have software that helps us identify mistakes that we make…. What I want is a piece of software that’s watching what I’m doing while I’m programming. Imagine it’s sitting on my shoulder, and I’m typing away, and it says ‘you just created a buffer overflow.’” (That’s a common class of mistake that lets hackers reach data beyond the buffer they’re authorized to access, as in the Heartbleed bug.)
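To make the parenthetical concrete, here is a minimal sketch of a Heartbleed-style over-read, written in Python. Python is memory-safe, so the sketch simulates memory with a `bytearray`; the names `MEMORY`, `echo_unsafe`, and `echo_safe` are invented for illustration and are not taken from any real library. The flaw is that the unsafe version trusts the length the requester claims instead of checking it against the real buffer size.

```python
# Simulated process memory: a 5-byte request buffer followed by unrelated secret data.
# (Illustrative layout only; real memory is not arranged this neatly.)
MEMORY = bytearray(b"hello" + b"SECRET-KEY-1234")
BUFFER_START = 0
BUFFER_SIZE = 5


def echo_unsafe(claimed_len: int) -> bytes:
    """Echo back 'claimed_len' bytes, trusting the caller's claim (the bug)."""
    return bytes(MEMORY[BUFFER_START:BUFFER_START + claimed_len])


def echo_safe(claimed_len: int) -> bytes:
    """Echo back data only after checking the claim against the buffer size."""
    if not 0 <= claimed_len <= BUFFER_SIZE:
        raise ValueError("claimed length exceeds buffer size")
    return bytes(MEMORY[BUFFER_START:BUFFER_START + claimed_len])


if __name__ == "__main__":
    print(echo_unsafe(5))   # an honest request gets only the buffer contents
    print(echo_unsafe(12))  # a dishonest request also leaks neighbouring bytes
```

The fix is one bounds check, which is exactly the kind of mistake Cerf wants a tool to flag as the programmer types.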

Vinton Cerf

Such an automated code-checker doesn’t require some far-future artificial intelligence. Cerf says there are newer languages and tools, such as TLA+ and Coq, that address at least parts of the problem already. Both use what are called “formal methods” or “formal analysis” to specify and check software rigorously and mathematically. There are also semi-automated ways to check a system’s cybersecurity, such as “fuzzing” – essentially, automatically generating random inputs to see if they can make a program crash.
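Fuzzing in its simplest form fits in a few lines. The sketch below is a toy, not how Mayhem or any production fuzzer works: `parse_record` is a hypothetical parser with a deliberately planted bug, and `fuzz` throws random byte strings at it and records every input that triggers an unexpected exception.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical toy parser: first byte declares the payload length."""
    if len(data) < 1:
        raise ValueError("empty input")  # a clean, expected rejection
    length = data[0]
    payload = data[1:1 + length]
    # Planted bug: reads a trailing byte without checking that it exists,
    # so a declared length longer than the input raises IndexError.
    _trailer = data[1 + length]
    return payload


def fuzz(target, trials=1000, max_len=8, seed=0):
    """Feed random byte strings to 'target'; return inputs that crash it."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    crashes = []
    for _ in range(trials):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # the parser rejected bad input cleanly; not a crash
        except Exception as exc:  # anything else counts as a crash
            crashes.append((data, exc))
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_record)
    print(f"{len(found)} crashing inputs found")
```

Real fuzzers are far smarter about choosing inputs, typically mutating known-good samples and tracking which code paths each input reaches, but the loop is the same: generate, run, watch for a crash.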

Artificial intelligence doesn’t have to be cutting-edge to be useful. The Mayhem program that won DARPA’s Cyber Grand Challenge, for instance, “did require some amount of AI, but we did not use a huge machine learning (system),” Brumley said. “In fact, NVIDIA called us up and offered their latest GPUs, but we had no use for them.” Mayhem’s main weapon, he said, was “hardcore formal analysis.”

“There is a lot of potential in this area, but we are in the very, very early stages of true artificial intelligence and machine learning,” HackerOne’s Rice told me. “Our tools for detection have gotten very, very good at flagging things that might be a problem. All of the existing automation today lags pretty significantly today on assessing if it’s actually a problem. Almost all of them are plagued with false positives that still require a human to go through and assess (if) it’s actually a vulnerability.”

So automation can increasingly take on the grunt work, replacing legions of human workers – but we still need highly skilled humans to see problems and solutions that computers can’t.
