DARPA, Army Test Optionally Manned Helicopter (It’s Not A.I.)

DARPA and Lockheed have modified an off-the-shelf commercial helicopter to fly itself in typical military missions, including supply runs, medevac, and recon. The next step: Port the MATRIX software over to an actual Army UH-60 Black Hawk, which will fly next year. The long run: Design next-generation scout and assault aircraft to be optionally piloted from the start.

For safety reasons, there were highly trained test pilots aboard the Sikorsky Autonomy Research Aircraft, a modified S-76B, during this month’s flight tests at Fort Eustis, Va. — but for much of the time, they might as well have stayed on the ground. In one case, Sikorsky autonomy director Igor Cherepinsky told reporters this afternoon, “we also had a non-pilot with all of 45 minutes of training take the aircraft up and operate for almost an hour.”

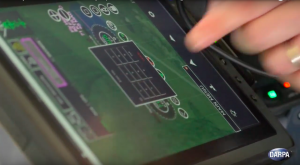

How? The humans just tapped on a tablet screen to issue some basic instructions — take off, fly here, land around there — that the computer then executed itself. During simulated pickups of cargo and casualties, a loadmaster on the ground could use a tablet to give directions as well, with the computer mediating between the input from the human on the ground and the human onboard. Even when the trained pilots wanted more precise input, instead of having to use both hands and both feet on traditional helicopter controls, they just used a joystick-like “inceptor” to direct the computer, which turned their broad commands into specific adjustments of the controls.

Optionally Manned, Not Unmanned

The goal is not to replace human operators altogether, but to give them a computerized co-pilot that can take over routine tasks at the touch of a button.

“It’s really like a co-pilot,” DARPA program manager Graham Drozeski enthuses in the video above. “It can fly routes, it can plan routes, it can execute emergency procedures — and it can do all that perfectly.”

While such an optionally manned aircraft might fly some low-risk missions entirely by computer, such as a supply run through friendly airspace, flights with passengers or into combat zones would have at least one human pilot and often two. The goal of what’s called human-machine teaming is to get the best of both worlds, a synergy some scholars liken to the mythical centaur.

That way trained human brains can focus on mission planning, military tactics, and other complex problems full of the ambiguity and unknowns that computers just don’t handle well. Meanwhile the machine makes sure they don’t fly into something in a moment of misjudgment or distraction. Such Controlled Flight Into Terrain is historically the No. 1 cause of fatal aircraft crashes, because humans are bad at the kind of relentlessly uninterrupted attention to physical details that computers find easy.

In fact, DARPA calls this technology ALIAS, the Aircrew Labor In-Cockpit Automation System, to emphasize how it assists rather than supplants the human pilots. Sikorsky — now part of Lockheed Martin — calls it MATRIX, which doesn’t stand for anything but sounds cool.

A Lockheed Martin press release said SARA demonstrated four critical capabilities:

- “Automated Take Off and Landing: The helicopter autonomously executed take-off, traveled to its destination, and autonomously landed

- “Obstacle Avoidance: The helicopter’s LIDAR and cameras enabled it to detect and avoid unknown objects such as wires, towers and moving vehicles

- “Automatic Landing Zone Selection: The helicopter’s LIDAR sensors determined a safe landing zone

- “Contour Flight: The helicopter flew low to the ground and behind trees.”

“Fort Eustis allowed us to operate this aircraft at tree tops, in close proximity to wires, and do all sorts of interesting things we couldn’t just do at airports or any other facility,” Cherepinsky said.

It’s Not AI

We’ve covered Sikorsky’s work on optionally manned aircraft for years now, and Cherepinsky has been emphatic that their computerized copilot is not “artificial intelligence.” At least, it’s not AI in the modern sense of the word.

Why? The latest machine learning algorithms can churn through vast amounts of data to produce surprising flashes of insight, but they can also leap to the wrong conclusions. On social media, that might just mean a chatbot starts spewing racist epithets, but it could lead to a military intelligence AI confusing civilians and targets — something hard enough for humans — or to an aircraft AI flying into a mountain.

Worse, from an engineering point of view, whether the AI is right or wrong, it’s impossible for humans to understand how it came to its conclusions.

So Sikorsky deliberately went with old-school “deterministic” software, where a given input always generates the same output. That let them build confidence that the system would always behave predictably, and submit it to rigorous safety certification protocols.
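To make “deterministic” concrete, here is a minimal Python sketch of a rule-based decision function. It is purely illustrative and is not Sikorsky’s code: the `SensorState` fields, the `choose_action` name, the action labels, and the 50-meter and 10-meter thresholds are all assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorState:
    """Hypothetical sensor snapshot; field names are assumptions."""
    altitude_m: float            # height above terrain, in meters
    obstacle_distance_m: float   # range to nearest obstacle ahead, in meters


def choose_action(state: SensorState) -> str:
    """Pure rule-based decision: no randomness, no learned weights, no hidden state."""
    if state.obstacle_distance_m < 50.0:   # assumed safety margin
        return "climb"
    if state.altitude_m < 10.0:            # assumed minimum cruise height
        return "hold_altitude"
    return "continue"


def is_repeatable(state: SensorState, runs: int = 1000) -> bool:
    """Check that the same input produces the same output on every call."""
    first = choose_action(state)
    return all(choose_action(state) == first for _ in range(runs))
```

Because the output depends only on the input, every rule can be read, tested, and traced back to a line of code, which is the property that makes this style of software easier to certify than a model whose behavior emerges from training data.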

Cherepinsky’s team also deliberately designed the computer co-pilot to be always at least partially on. Unlike a conventional autopilot, which is either on or off, MATRIX is always checking on the human crew, even when they take full manual control, and can take over at any time if they’re about to crash. Depending on the mission, the circumstances, and their personal comfort level, the human operators can let the computer take on more or fewer tasks from moment to moment.
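The “always at least partially on” idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how MATRIX is actually implemented: the `supervise` function, the one-second look-ahead, and the 15-meter altitude floor are all hypothetical.

```python
def supervise(pilot_climb_rate_mps: float,
              altitude_m: float,
              min_safe_altitude_m: float = 15.0,
              lookahead_s: float = 1.0) -> tuple[float, bool]:
    """Return (climb rate actually commanded, whether the computer intervened).

    All names and thresholds here are assumptions made for illustration.
    """
    # Project where the pilot's command puts the aircraft after the look-ahead.
    projected_altitude_m = altitude_m + pilot_climb_rate_mps * lookahead_s
    if projected_altitude_m < min_safe_altitude_m:
        # Intervene: command the climb rate that ends exactly at the floor.
        required_rate_mps = (min_safe_altitude_m - altitude_m) / lookahead_s
        return max(pilot_climb_rate_mps, required_rate_mps), True
    # The pilot's command is safe, so pass it through unchanged.
    return pilot_climb_rate_mps, False
```

In this sketch the check runs on every command, even in full manual control, and the pilot’s input is altered only when it would take the aircraft below the floor. A conventional on/off autopilot would have no equivalent of the pass-through branch while disengaged.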

This month’s demonstration at Fort Eustis was the first time Army pilots have operated the MATRIX aircraft, as opposed to Sikorsky employees. Getting their feedback was a top priority for these flights, DARPA’s Drozeski said, and he was struck by how quickly the Army’s senior pilot grew comfortable using the more highly autonomous modes.

“The big takeaway,” Cherepinsky said, “is the fluid interaction.”
