Army Wrestles With Testers Over Network Upgrades


Army soldiers try out an early version of the new Command Post Computing Environment (CPCE)

FORT MYER: A forthcoming Pentagon test report will pan key parts of the Army’s new battlefield command network, two generals warned me this week. But they say the Director of Operational Test & Evaluation (DOT&E) is drawing on data that is months out of date and doesn’t reflect subsequent upgrades to the software.

In fact, they said, the new Command Post Computing Environment (CPCE) — meant to start fielding to operational units later this year — already shows big improvements over the often-cumbersome systems today’s commanders and staffs must use.

Maj. Gen. David Bassett

The problem is with the testing process itself, Major Generals David Bassett and Peter Gallagher told me here. At issue is not just this particular program, but the much wider question of how a Pentagon testing apparatus designed for big industrial-age programs can keep up with the much faster and more fluid upgrade cycles of information technology.

“You’re not going to make dramatic change to hardware that quickly; the software can change dramatically in a relatively short period of time,” said Bassett, the Army’s Program Executive Officer for Command, Control, & Communications – Tactical (PEO C3T). “We’ve added functionality that wasn’t even there three months ago that today we think addresses many of the shortcomings that were identified in the early tests.”

Army Command Post Computing Environment (CPCE)

Perfect Vs. Better

“There are commanders out there asking for this stuff yesterday,” said Gallagher, who heads the Army-wide task force (called a Cross Functional Team) that pulls together scientists, soldiers, engineers, and, yes, testers to improve the service’s networks.

“Division commanders are saying, ‘yes, we want this system out here and we want to run it through the gauntlet of a warfighter exercise'” — a high-stakes wargame in which units are normally reluctant to try anything unproven. “We did the same thing with XVIII Airborne Corps last year and III Corps this year….There’s been some really good feedback.”

Maj. Gen. Peter Gallagher

The early iterations of CPCE did show “mixed results,” Gallagher acknowledged. While the test version offered dramatic improvements in such areas as sharing data with allies, he said, it struggled to scale up to larger operations, and future versions will take a new approach to network operations (NETOPS). But the whole point of the exercise was to identify such problems so the program could fix them quickly.

DOT&E, however, doesn’t account for that iterative test-learn-fix-test cycle, Bassett lamented. Instead, it’s set up to give what are basically binary pass/fail grades: Operationally Effective or Not Operationally Effective, Operationally Suitable or Not Operationally Suitable. What’s more, it scores a new weapons program against its own declared objectives (the desired future end state) rather than against the existing systems it’s supposed to replace (the current reality).

“Often the choice is between something that we know isn’t perfect and something in the field that we know is even worse,” Bassett said. “We’re really trying to get at more effective, more suitable, more survivable, and those just aren’t words that are used in the evaluations that we receive today.” The goal, he argued, is “to not make perfect the enemy of better.”

The upgraded M109A7 Paladin fires during a test in Yuma, Arizona

Bassett ran into this problem in a previous life as the Army’s Program Executive Officer for combat vehicles. Back then, the Pentagon Inspector General rapped his effort to modernize the M109 Paladin howitzer because the new fire suppression system couldn’t put out burning propellant if the ammunition storage got hit. That report neglected two key details: “There was no substance on earth that could put out that propellant,” Bassett said vehemently, and the vehicle had no fire extinguisher at all before the upgrade.

Then DOT&E declared in 2017 that the upgraded M109 Paladin as a whole was “not operationally effective and not operationally suitable,” largely because the gun still couldn’t fire a certain kind of shell. But — as the DOT&E report acknowledged a paragraph later — “the program was never intended to upgrade the gun,” Bassett seethed, only the turret mechanisms and the chassis.

“Is there some scar tissue here?” Gallagher asked with a chuckle, watching Bassett’s blood pressure soar at the memory.

“I’m afraid we’re going to find ourselves in that same position on the network,” Bassett said. “We’re talking about an incremental plan to deliver capability every two years, really for the foreseeable future,” at least until the Army’s modernization target date of 2028. “We know that the first increment we deliver, [Capability] Set 21, will not deliver the network of 2028, but at the same time it’s a meaningful step in that direction.”

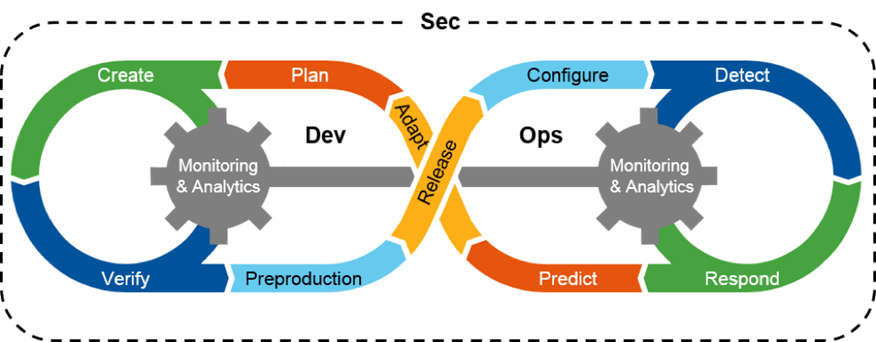

A diagram of the DevSecOps (Development – Security – Operations) model for developing software.

Fail Fast?

The perfect vs. better dilemma is particularly acute with modern software development, which embraces ideas like “fail fast.”

You bring your software developers, cybersecurity specialists, and the intended users/operators together from the start — a concept called DevSecOps — and quickly throw together a basic version — the “minimum viable product” — that you know is far from perfect but gives the users something to try out and give feedback on. Then you take that feedback, quickly fix shortfalls and add new features, and roll out the next iteration for testing.

Repeat ad infinitum, because you’re never truly done: technology keeps changing, and changes so rapidly, that you never reach a perfected final product. Yes, there’s a formal test, Bassett and Gallagher said, but the version you take to test isn’t a final product; it’s just one iteration along the way.

Testing at Army Electronic Proving Ground at Fort Huachuca, Ariz.

The problem is that the Pentagon testing bureaucracy, and the Congress that created it, are built around a much older imperative than “fail fast”: don’t fail. Law, regulation, and established process generally assume the program develops a final product, perfects it as much as possible, and only then lets the test community and the end users get their hands on it.

“When a test report is written, it’s written against the standard of the intended operational environment, and so you’ll see reports that will say ‘not effective,'” Bassett said. On the Command Post Computing Environment, he said, “you’re going to see a report coming out very soon that’s going to say exactly that, [but] it’s a snapshot in time.”

“It’s seven months old and we’ve iterated twice, three times since then,” Gallagher said. “We’re working with DOT&E… to try to map out, based on [their] recommendations, what kind of immediate developmental testing review can we do to prove…”

“How much is still true,” Bassett interjected.

“…we’ve already attacked the problem,” Gallagher concluded.

With the new methodology, “I am incredibly pleased with the increase in the speed with which we’re improving these capabilities,” Bassett said. “We’re finding things faster, we’re fixing things faster, we’re turning those changes faster.”

“But that doesn’t always show up in the test report snapshot,” he said. “We’re really challenging some long-held processes within the Department.”
