AI, Cybersecurity, & The Data Trap: You Don’t Know Normal

BILLINGTON CYBERSECURITY SUMMIT: Artificial Intelligence has a big big-data problem when it comes to network defense, senior defense officials warned this afternoon.

Sure, AI can pounce on a problem in a microsecond, long before a human operator can react. All you need to do is train the machine-learning algorithms. So you let the AI prowl through every computer, smartphone, server, router, Internet connection, etc. until the algorithm learns what normal looks like on your network. Then the AI can sit back and watch every passing bit and byte, ready to jump on any anomalous activity, from malware slipping in as an email attachment to a disgruntled employee downloading all your sensitive files, Edward Snowden-style.

Dean Souleles, chief technology advisor in the Office of the Director of National Intelligence (ODNI), at the Billington Cybersecurity Summit.

But here’s the problem with that scenario. How do you know what is right in the first place? In the worst case, what if, when your AI diligently learned what activity was “normal” in your system, the bad guys were already inside?
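To make that worst case concrete, here is a minimal sketch in Python. It is not any system described at the summit: the traffic figures, the three-standard-deviation threshold, and the function names `fit_baseline` and `is_anomalous` are all assumptions chosen for illustration. It learns "normal" as the mean and spread of past activity, then checks whether a bulk download stands out.

```python
import statistics

def fit_baseline(samples):
    """Learn 'normal' as the mean and standard deviation of the training samples."""
    return statistics.mean(samples), statistics.stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the mean."""
    mean, stdev = baseline
    return abs(value - mean) > threshold * stdev

# Hypothetical daily outbound traffic (megabytes) for one user account.
clean_days = [48, 52, 50, 47, 53, 49, 51, 50, 46, 54]

# Same account, but an intruder was already pulling data out
# on three of the days the detector trained on.
tainted_days = clean_days[:7] + [900, 950, 870]

clean_baseline = fit_baseline(clean_days)
tainted_baseline = fit_baseline(tainted_days)

bulk_download = 900  # new activity to evaluate, in megabytes

print(is_anomalous(bulk_download, clean_baseline))    # True: stands out from normal
print(is_anomalous(bulk_download, tainted_baseline))  # False: now looks like normal
```

With a clean training window, the 900 MB transfer is flagged. With the intruder's earlier transfers mixed into the training data, the learned mean and spread stretch to cover it, and the same transfer passes unnoticed.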

It’s a lot like walking through a rain forest, listening to a hundred kinds of bird, monkey, and frog as they sing and screech and squawk, hoping that you’ll hear the cacophonic chorus change when something dangerous starts to sneak up on you. Unless you understand that complex sound environment extremely well, you’re not going to detect the tiger coming before it eats you.

“A decade ago, when we talked about cybersecurity, we were probably talking about anti-virus definitions,” said Dean Souleles, the chief technology advisor in the Office of the Director of National Intelligence. But that relatively narrow problem has expanded into something vastly more complex, dynamic, and difficult to understand, he told the Billington cybersecurity conference here in DC: “Now, we’re talking about a living breathing ecosystem.

“How do I even know what’s normal and what’s abnormal so I can detect anomalies?” Souleles said. “We simply don’t know.” Instead of simply putting known malware on a blacklist, he said, modern cybersecurity has to be able to defeat “cyber attacks we can’t define.”

Lt. Gen. Jack Shanahan at the Billington conference

It’s the digital equivalent of the undiscovered species in the rain forest: Before you pick up that pretty frog, you’d better find out if its skin is poisonous.

Understanding the cyber jungle is even harder than teaching AI to understand the physical world, said Lt. Gen. Jack Shanahan, director of the Pentagon’s year-old Joint Artificial Intelligence Center.

“What does normal look like?” Shanahan asked. “If we’re trying to detect anomalous behavior, we have to know what the baseline is …. It’s much more challenging in cyber than in full-motion video or in predictive maintenance or even humanitarian assistance.”

At the now-famous Project Maven, which applied AI to analyzing reconnaissance video of suspected terrorists, “we spent a lot of our time on the front end, object labeling, preparing the data, 80 percent,” recounted Shanahan, who used to run Maven. But at least they were looking through that data for real, physical objects whose appearance is clearly defined, he said. There’s even a publicly available database, ImageNet, with over 14 million images neatly tagged and organized, mostly by human annotators, in categories ranging from “amphibians” to “vehicles,” each with hundreds of subdivisions. But, Shanahan lamented, “there is not an ImageNet equivalent for cyber.”

It’s well-known what a human, a truck, or a missile launcher looks like, and human analysts can label images of each accordingly. A large set of clearly labeled, well-organized data points is what machine learning algorithms need to learn from, before they can try making sense of raw data the humans haven’t cleaned up for them.

But humans have no intuitive sense of what a computer virus looks like. They can’t smell unauthorized downloads or hear a user account improperly gain super-user access. They can’t even perceive what’s happening inside a computer network without using special tools. So when humans try to label cybersecurity data so machine learning algorithms can train on it, it may not be the blind leading the blind, but all parties involved are at least severely astigmatic.

The Defense Department is launching a major effort to clean up its cybersecurity data, Shanahan said. “Across the department, we have 24 cybersecurity service providers, all of whom are collecting data in slightly different ways, so our starting point is actually coming up with a cyber data framework,” he said. Cyber Command and the National Security Agency are leading the effort.

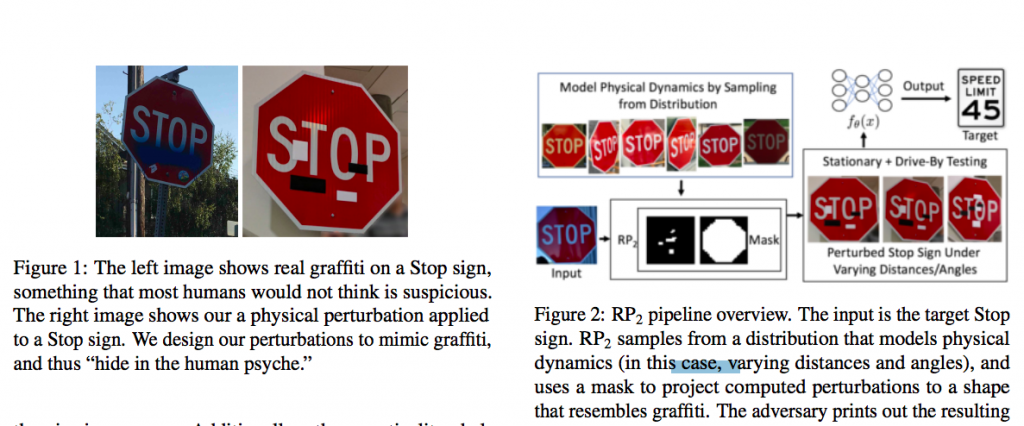

With strategically placed bits of tape, a team of AI researchers tricked self-driving cars into seeing a STOP sign as a speed limit sign instead.

But even if your data is cleaned up, properly formatted, and clearly tagged, how can you be sure that it’s actually right? How can you be sure it wasn’t deliberately manipulated or planted as part of an enemy’s deception strategy? That’s a particularly acute problem for artificial intelligence, because research into adversarial AI has found that machine learning algorithms can be fooled by subtle distortions a human would not even notice, like strategically placed tape making a STOP sign look like a 45 MPH speed limit sign.
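The effect can be reproduced on a toy scale. The sketch below is not the method the stop-sign researchers used, which involved physical stickers and a real image classifier. It is a simplified illustration, in the spirit of gradient-sign attacks, against a made-up linear model. The weights, the 100 "pixel" values, and the names `score`, `classify`, and `perturb` are all hypothetical.

```python
def score(weights, x):
    """Linear classifier score: positive means 'stop', otherwise 'speed_limit'."""
    return sum(w * xi for w, xi in zip(weights, x))

def classify(weights, x):
    return "stop" if score(weights, x) > 0 else "speed_limit"

def perturb(weights, x, epsilon):
    """Move every feature by exactly epsilon in the direction that lowers the score."""
    return [xi - epsilon * (1 if w > 0 else -1) for w, xi in zip(weights, x)]

# Hypothetical toy model: 100 "pixels" with alternating positive and negative weights.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]

# Hypothetical input on a 0-to-1 brightness scale that the model reads as a stop sign.
image = [0.55 if w > 0 else 0.45 for w in weights]

# Nudge each pixel by 0.06, a small change on the 0-to-1 scale.
adversarial = perturb(weights, image, epsilon=0.06)

print(classify(weights, image))        # stop
print(classify(weights, adversarial))  # speed_limit
print(max(abs(a - b) for a, b in zip(adversarial, image)))  # largest per-pixel change
```

No single pixel moves by more than 0.06, but because every nudge pushes the score the same way, the small changes add up across all 100 features and flip the label.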

It’s not just cleaning up the data, said Lynne Parker, assistant director for AI at the White House’s Office of Science & Technology Policy. “It’s also determining whether or not you can trust it,” she said. “You may have perfectly good-looking data but in fact it may have been tampered with.”
